Trality Outage on 27/11/2020

CHRISTOPHER HELF

02 December 2020 • 3 min read

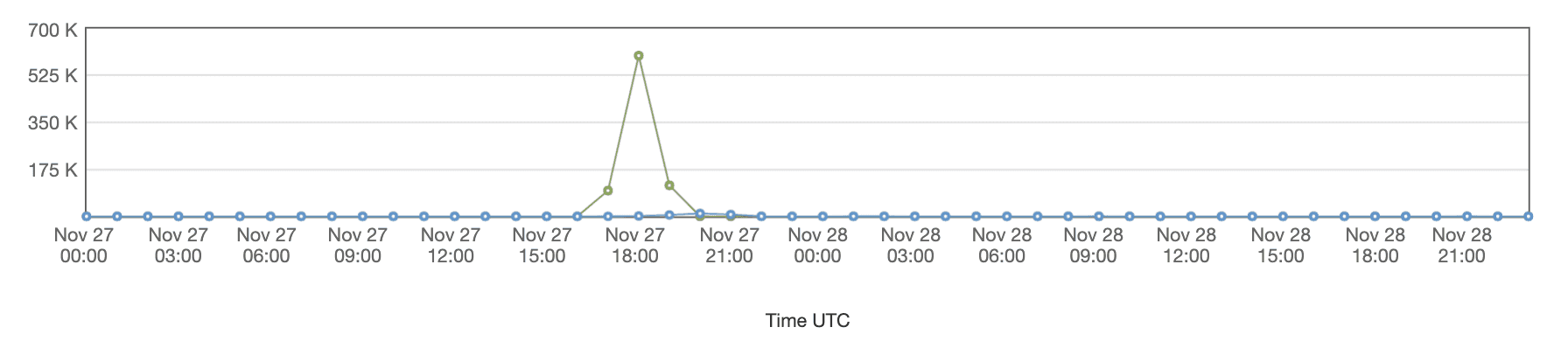

On the 27th of November 2020, a bot network targeted our platform and sent over 700k requests in a very short period of time.

All requests targeted the /auth/token endpoint of our OAuth 2.0 authentication service, with the attacker(s) attempting logins using usernames and passwords that we assume were gathered from password leaks. Our team responded very quickly and took the authentication endpoint offline as a security precaution, giving us more time to analyse the pattern of the incoming traffic in depth. As a result, our platform was not accessible to users for around 5 hours until we had the attack under control. User data, however, was not impacted or leaked. We're writing this blog post because transparency and clear communication with our community are core values of Trality, and we want our users to know how we are improving our systems so that we can handle incidents like this more efficiently and quickly in the future.

Detailed Timeline

All times are GMT+1.

- 2020-11-27 17:00 - We're seeing a strong spike in the number of requests to our platform

- 2020-11-27 17:15 - The first responder team analyses the traffic and starts the investigation

- 2020-11-27 17:30 - As a security precaution, the authentication service is taken offline, as all requests target that endpoint

- 2020-11-27 18:30 - CloudFront and WAF configurations are improved to better understand traffic patterns; attempts at blocking are underway (e.g. rate limits are drastically lowered)

- 2020-11-27 19:30 - Sweden as a country is blocked as all requests originate from there, which turns out to be only a temporary solution as the attackers quickly switch to other countries

- 2020-11-27 20:00 - More restrictive WAF rules targeting specific patterns we're seeing in the requests are applied, and we now manage to block the majority of requests

- 2020-11-27 23:00 - WAF rules are now successfully blocking all of the attackers' requests

- 2020-11-27 23:30 - First attack wave stops; the authentication endpoint is brought back online and the team tests whether regular traffic is impacted

- 2020-11-28 00:00 - Service is back online

- 2020-11-28 01:00 - Second attack wave begins; WAF rules are still in place and block all of the attackers' requests

- 2020-11-28 01:15 - Second attack wave stops

Internal Impact

Our internal systems were briefly affected by the attack through our logging system.

Given the large number of requests, our centralised logging system reached 100% CPU utilisation, which in turn led to timeouts in some of the services that tried to report both metrics and logs. The team had to issue a number of hotfixes to get the internal systems running again, even after we had disabled general public access to our platform.
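To illustrate the failure mode described above, here is a minimal Python sketch of one common way to stop a saturated logging backend from stalling the services that report to it: log records go into a bounded in-memory queue and are dropped (and counted) when the queue is full, instead of blocking the caller. This is a sketch under stated assumptions, not the setup used on our platform; `DroppingQueueHandler`, `build_logger`, and `max_pending` are hypothetical names chosen for this example.

```python
import logging
import logging.handlers
import queue


class DroppingQueueHandler(logging.handlers.QueueHandler):
    """Hypothetical handler: enqueue without blocking, drop when the queue is full."""

    def __init__(self, log_queue):
        super().__init__(log_queue)
        self.dropped = 0  # number of records discarded because the queue was full

    def enqueue(self, record):
        try:
            # put_nowait never blocks the calling service
            self.queue.put_nowait(record)
        except queue.Full:
            # Losing a log line is preferred here over timing out a request
            self.dropped += 1


def build_logger(name, downstream_handler, max_pending=1000):
    """Wire a logger to a bounded queue drained by a background listener.

    `downstream_handler` stands in for whatever ships logs to the central
    logging system (an assumption for this sketch).
    """
    log_queue = queue.Queue(maxsize=max_pending)
    handler = DroppingQueueHandler(log_queue)
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False
    # The caller starts the listener with listener.start(); it forwards
    # queued records to the downstream handler on its own thread.
    listener = logging.handlers.QueueListener(log_queue, downstream_handler)
    return logger, handler, listener
```

The design choice is a trade-off: under extreme load some log lines are lost, but the `dropped` counter makes that loss visible and request handling stays independent of the logging system's health.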

External Impact

During the attack, our service was not reachable for a period of roughly 5 hours. User data was not impacted, as we only store hashed passwords, and exchange credentials are stored in a way that neither users themselves nor we as administrators have access to them once they are stored. We advise users to check whether their email address was part of a breach in another system, for example using https://haveibeenpwned.com/, and to change their passwords should they find their email in a leak.

Improvements made

Our biggest takeaway from this incident is that we didn't configure our public endpoints restrictively enough. We had very generous rate limits in place that our users would never reach, which allowed the attackers to send the number of requests we saw from a large IP pool. We're also talking to AWS directly to get stronger and better rules in place that will mitigate future issues like this even better. Another important lesson is that we need to improve the isolation of our internal services, e.g. our entire bot system, so that no public endpoint can have an indirect impact on the critical internal services that we run. We're currently working on separating the two subsystems entirely. We're also planning to improve identity verification soon, using measures such as MFA.
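To illustrate what a tighter rate limit on a login endpoint can look like, here is a minimal token-bucket sketch in Python. It is an illustration under stated assumptions, not the WAF configuration described above: the class names, the default limits, and the choice of key are all hypothetical. With a large IP pool, a per-IP key alone is easy to sidestep, so the key could just as well be the targeted username, or a combination of both.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, now):
        self.capacity = float(capacity)
        self.refill_per_second = float(refill_per_second)
        self.tokens = float(capacity)  # start with a full bucket
        self.updated_at = now

    def allow(self, now):
        # Add the tokens earned since the last call, capped at capacity
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class LoginRateLimiter:
    """Hypothetical limiter keeping one bucket per client key (IP, username, ...)."""

    def __init__(self, capacity=5, refill_per_second=0.1, clock=time.monotonic):
        # Example defaults (assumed values): bursts of 5 attempts,
        # then roughly one attempt every 10 seconds per key.
        self._capacity = capacity
        self._refill_per_second = refill_per_second
        self._clock = clock
        self._buckets = {}

    def allow(self, client_key):
        now = self._clock()
        bucket = self._buckets.get(client_key)
        if bucket is None:
            bucket = TokenBucket(self._capacity, self._refill_per_second, now)
            self._buckets[client_key] = bucket
        return bucket.allow(now)
```

A request handler would call `limiter.allow(key)` before checking credentials and reject the attempt (for example with HTTP 429) when it returns `False`. A production version would also need to evict idle buckets and share state across instances, which this sketch leaves out.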

Conclusion

We're still a small team and we’ve never experienced an attack like this before. Even though our team responded quickly, the time during the attack was painful for everyone involved as we knew that our customers were impacted. We are sorry for the disruption caused to our customers while the attack was happening.

We're in the process of improving our systems to be able to handle attacks like this better, and we're developing an internal protocol for how we handle such incidents and improve communication with our customers, as we really want to be fully transparent about every aspect of our system.